REGRESSION TECHNIQUES IN MACHINE LEARNING

Machine learning has become one of the most popular and in-demand technologies. It is used in everyday life in virtual assistants, forecasting, video surveillance, social media services, spam mail detection, online customer support, search engine result prediction, fraud detection, recommendation systems, and more. Regression is one of the most important topics to learn in machine learning. This article covers the different types of regression techniques used in machine learning.

Introduction:

Regression algorithms such as linear regression and logistic regression are among the first algorithms people learn when they study machine learning. There are numerous forms of regression, each with its own specific features, and each is applied according to the problem at hand. Regression techniques are used to find the relationship between a dependent variable and one or more independent variables (features). Regression is a part of data analysis, and its main aims are forecasting, time series analysis, and modelling.

What are Regression techniques in Machine Learning?

Regression is a statistical method, widely used in finance, investing, sales forecasting, and other business disciplines, that attempts to determine the strength and nature of the relationship among variables.

There are two types of variables in a dataset to which regression techniques are applied:

- Dependent variable, usually denoted as Y

- Independent variable, usually denoted as X

There are also two types of regression:

- Simple regression: uses a single independent feature/variable

- Multiple regression: uses two or more independent features/variables

In regression studies, the following six regression techniques are the ones mainly used for complex problems.

- Linear regression

- Logistic regression

- Polynomial regression

- Stepwise Regression

- Ridge Regression

- Lasso Regression

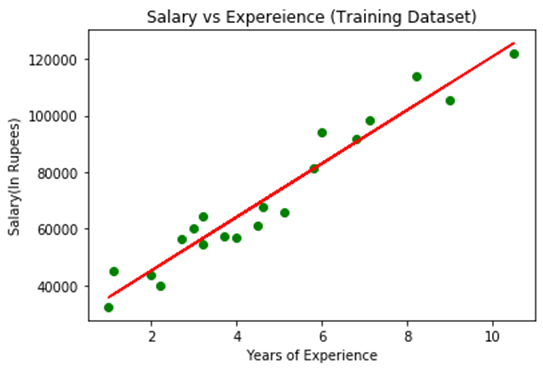

Linear regression techniques in Machine Learning:

Linear regression is a supervised machine learning algorithm used mainly for predictive analysis. It is a linear approach to modelling the relationship between a scalar response and one or more predictor variables. It focuses on the conditional probability distribution of the response given the values of the predictors. The formula for simple linear regression is Y = mX + c.

Where Y is the target variable, m is the slope of the line, X is the independent feature, and c is the intercept.
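The formula above can be fitted to data with ordinary least squares. The following is a minimal sketch in plain Python, assuming a single feature and made-up data points; the function name `fit_line` is hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys):
    """Return (m, c) that minimize the squared error of Y = m*X + c."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: co-variation of X and Y divided by the variation of X.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    m = s_xy / s_xx
    # Intercept: the fitted line passes through the point of means.
    c = mean_y - m * mean_x
    return m, c


# Made-up data lying exactly on Y = 2X + 1 (an assumption for the demo).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
m, c = fit_line(xs, ys)
print("slope:", m, "intercept:", c)
```

With real data the points will not lie exactly on a line, and the same function returns the best-fitting slope and intercept in the least squares sense.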

Additional points on Linear regression:

- There should be a linear relationship between the variables.

- It is very sensitive to outliers, which can lead to a model with high variance or high bias.

- Multicollinearity can become a problem when there are multiple independent features.

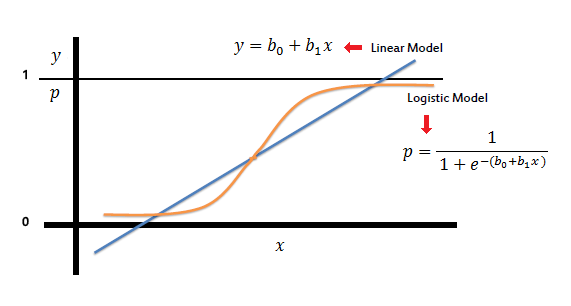

Logistic regression techniques in Machine Learning:

Logistic regression is used for classification problems in which the classes can be separated reasonably well by a linear boundary. In layman’s terms, it applies when the dependent (target) variable is binary: 1 or 0, true or false, yes or no. It is well suited to deciding whether an outcome is more likely to be a success or a failure.

Additional points:

- It is used for classification problems.

- It does not require a linear relationship between the dependent variable and the independent features.

- It can be affected by outliers, and it can underfit or overfit.

- It needs a large sample size to make the estimation more accurate.

- Collinearity and multicollinearity among the independent features should be avoided.
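The binary classification described above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming one feature, made-up data (hours studied versus pass or fail), and batch gradient descent on the log loss; the names `fit_logistic` and `predict`, the learning rate, and the number of epochs are all illustrative choices, not a reference implementation.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit P(y=1 | x) = sigmoid(w*x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log loss with respect to w and b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(x, w, b):
    """Return class 1 when the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


# Made-up data: hours studied and whether the student passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
w, b = fit_logistic(hours, passed)
print("prediction for 1.0 hours:", predict(1.0, w, b))
print("prediction for 4.5 hours:", predict(4.5, w, b))
```

The model outputs a probability, and the 0.5 cut-off turns it into a yes/no decision; a different cut-off can be chosen when one kind of error is more costly than the other.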

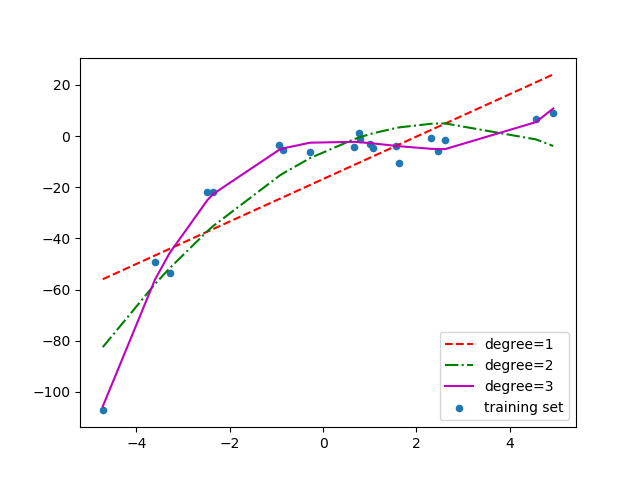

Polynomial Regression:

Step-wise Regression techniques in Machine Learning:

- Stepwise regression does one of two things at each step: it adds a predictor or it removes a predictor.

- Forward selection starts with the most significant predictor in the ML model and then adds a feature at each step.

- Backward elimination starts with all the predictors in the model and then removes the least significant variable at each step.
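The forward selection procedure described above can be sketched as follows. This is a simplified illustration in plain Python under stated assumptions: the data is made up, and "significance" is approximated by how much a feature lowers the residual sum of squares (RSS) rather than by a formal statistical test. The helper names `solve`, `rss`, and `forward_select`, and the `min_gain` threshold, are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def rss(rows, ys, cols):
    """RSS of a least squares fit using an intercept plus the given columns."""
    design = [[1.0] + [row[c] for c in cols] for row in rows]
    p = len(cols) + 1
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    beta = solve(xtx, xty)
    preds = [sum(bk * v for bk, v in zip(beta, d)) for d in design]
    return sum((y - yhat) ** 2 for y, yhat in zip(ys, preds))


def forward_select(rows, ys, min_gain=0.01):
    """Greedily add the feature that lowers RSS the most.

    Stops when the best remaining feature reduces RSS by less than
    min_gain times the intercept-only RSS (an illustrative stopping rule).
    """
    selected, remaining = [], list(range(len(rows[0])))
    total = current = rss(rows, ys, selected)
    while remaining:
        scores = {c: rss(rows, ys, selected + [c]) for c in remaining}
        best = min(scores, key=scores.get)
        if current - scores[best] < min_gain * total:
            break
        selected.append(best)
        remaining.remove(best)
        current = scores[best]
    return selected


# Made-up data: the target depends on columns 0 and 2 only.
rows = [[1, 4, 2], [2, 5, 7], [3, 6, 1], [4, 3, 8],
        [5, 2, 3], [6, 7, 6], [7, 8, 4], [8, 1, 5]]
ys = [2 * r[0] + 3 * r[2] + 1 for r in rows]
selected = forward_select(rows, ys)
print("selected feature columns:", selected)
```

Backward elimination follows the same pattern in reverse: start with every column selected and repeatedly drop the one whose removal increases the RSS the least.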

Ridge Regression:

Additional points:

- Its assumptions are the same as those of least squares regression, except that normality is not assumed.

- It shrinks the values of the coefficients, but they do not reach zero.

- It is a regularization method that uses L2 regularization.
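The shrinking behaviour described above can be seen in a minimal single-feature sketch. It assumes made-up data, an unpenalized intercept, and the objective sum((y - m*x - c)^2) + lam * m^2, where `lam` is the regularization strength; the function name `ridge_slope` is hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Slope m minimizing sum((y - m*x - c)**2) + lam * m**2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    # The penalty lam inflates the denominator, pulling the slope toward zero.
    return s_xy / (s_xx + lam)


# Made-up data lying exactly on Y = 2X + 1 (an assumption for the demo).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
for lam in (0.0, 5.0, 95.0, 1000.0):
    print("lam =", lam, "slope =", ridge_slope(xs, ys, lam))
```

With `lam = 0` the result is the ordinary least squares slope. As `lam` grows the slope keeps getting smaller, but for this data it stays positive and never becomes exactly zero.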

Lasso Regression:

Lasso is an abbreviation of Least Absolute Shrinkage and Selection Operator. It is similar to ridge regression in that it also penalizes the size of the regression coefficients, but it penalizes their absolute values rather than their squares. In addition, it is capable of reducing the variability and improving the accuracy of linear regression models.

- Lasso regression can shrink coefficients all the way to zero, which helps with feature selection when building an ML model.

- It is a regularization method that uses L1 regularization.

- If there is a group of highly correlated features, it tends to pick only one of them and shrink the others to zero.
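The way lasso drives a coefficient to exactly zero can be shown with a minimal single-feature sketch. It assumes made-up data, an unpenalized intercept, and the objective 0.5 * sum((y - m*x - c)^2) + lam * |m|, whose solution is given by soft-thresholding; the function names `soft_threshold` and `lasso_slope` are hypothetical.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, and return exactly zero if |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_slope(xs, ys, lam):
    """Slope m minimizing 0.5 * sum((y - m*x - c)**2) + lam * abs(m)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    return soft_threshold(s_xy, lam) / s_xx


# Made-up data lying exactly on Y = 2X + 1 (an assumption for the demo).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
for lam in (0.0, 5.0, 10.0, 20.0):
    print("lam =", lam, "slope =", lasso_slope(xs, ys, lam))
```

Unlike the L2 penalty, the L1 penalty sets the slope to exactly zero once `lam` is large enough, which is what removes a feature from the model.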

